Cost blowups make good stories. Cost drift is what actually eats your budget.

Drift comes from normal, boring behavior:

A team enables diagnostics “for a week” and forgets.

Autoscale does what it’s designed to do, then never scales back down.

Backups and snapshots pile up like receipts in a junk drawer.

A new service rolls out under a shared subscription, and nobody notices the new meter until Finance asks.

Here’s the uncomfortable part: drift isn’t a tooling problem. It’s an attention problem.

So we fix attention first.

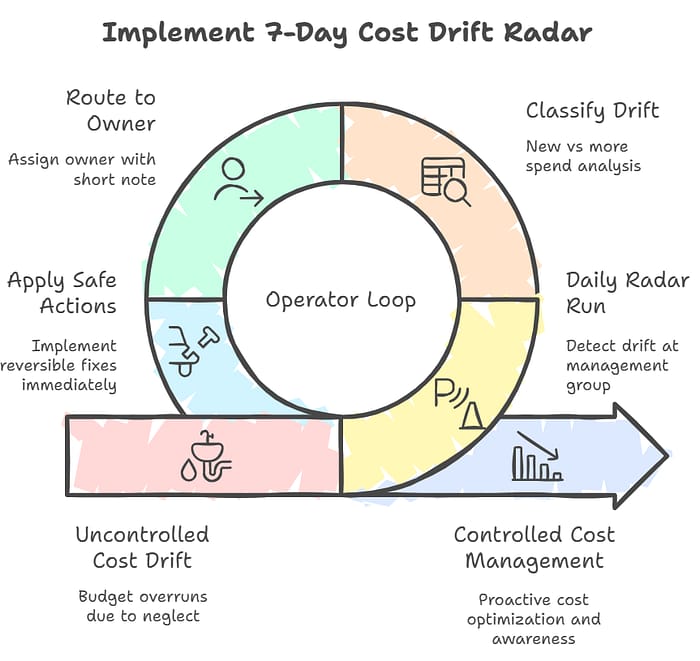

The operator loop (what you do daily)

This is a 10- to 15-minute daily loop, Monday through Friday. No heroics. No war room.

Goal: Detect drift at the management group scope, route it to a real owner, apply the safest action first, then file the “risky” work for change control.

Source of truth (best practice stack):

Cost exports (daily) for numbers you can trust and automate against

Azure Resource Graph for “what resources changed or exist right now”

KQL for “why did logs or telemetry explode”

Lightweight alerting to email/Teams when something moves outside your tolerance band

If you want a mental model: this is a smoke detector, not a fire investigation.
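A "tolerance band" can be as simple as a percentage plus an absolute floor, so a small subscription doesn't trip the detector over a few dollars. Here's a minimal sketch. The 15% band and $50 floor are hypothetical defaults picked for illustration, not Azure settings; tune both to your own spend.

```python
def outside_tolerance(current: float, baseline: float,
                      pct_band: float = 0.15, abs_floor: float = 50.0) -> bool:
    """Return True when spend moved past both the absolute floor and the percentage band.

    pct_band and abs_floor are illustrative defaults, not recommendations.
    """
    delta = current - baseline
    if abs(delta) < abs_floor:
        # Too small to matter in absolute terms, whatever the percentage says.
        return False
    if baseline <= 0:
        # No prior spend to compare against: anything above the floor counts.
        return True
    return abs(delta) / baseline > pct_band
```

Checking the floor first is the point of the design: a 300% jump from $5 to $20 stays quiet, while a 20% jump on $1,000 fires.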

The 7-day radar checklist (daily signals)

Run these in order. Top-down only. You don’t start at the resource group unless you like suffering.

1) “What moved?” (management group scope)

Compare the last 7 days vs the prior 7 days at the management group scope.

Pull the top 3 subscriptions contributing to the change, then the top 3 resource groups inside each.

Operator rule: if it isn’t a top 3 mover, it doesn’t exist today.
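The 7-day comparison can be sketched in a few lines once the daily export is loaded into rows. This assumes each row is a dict with hypothetical keys `date`, `subscription`, and `cost`; real export column names depend on the export schema you picked, so map them first.

```python
from collections import defaultdict
from datetime import date, timedelta

def top_movers(rows, today, key="subscription", n=3):
    """Rank values of `key` by absolute cost change: last 7 full days vs the 7 before.

    Row keys ("date", "cost", and `key`) are assumed names, not export columns.
    """
    recent_start = today - timedelta(days=7)
    prior_start = today - timedelta(days=14)
    recent = defaultdict(float)
    prior = defaultdict(float)
    for row in rows:
        day = row["date"]
        if recent_start <= day < today:
            recent[row[key]] += row["cost"]
        elif prior_start <= day < recent_start:
            prior[row[key]] += row["cost"]
    # Include keys that appear in only one window (brand-new or vanished spend).
    deltas = {k: recent.get(k, 0.0) - prior.get(k, 0.0)
              for k in set(recent) | set(prior)}
    return sorted(deltas.items(), key=lambda kv: abs(kv[1]), reverse=True)[:n]
```

Today's partial day is excluded on purpose, so a half-loaded export doesn't look like a cost drop. Pass a different `key` to rerun the same comparison one level down.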

2) “Is it new spend or more spend?”

New spend: new Azure resource group, new service, new region, new SKU, new workload.

More spend: same workload, higher usage, bigger scale, more retention, more throughput.
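One way to make the new-vs-more call mechanical is to compare which cost keys existed in each window. This is a sketch under an assumption: each key is a tuple like (resource group, service, region, SKU), and you've already summed cost per key for the prior and recent 7 days.

```python
def classify_spend(prior_cost: dict, recent_cost: dict) -> dict:
    """Split an increase into 'new' (keys unseen last window) and 'more' (growth on known keys).

    The key shape, e.g. (resource_group, service, region, sku), is an assumption.
    """
    new = 0.0
    more = 0.0
    for key, cost in recent_cost.items():
        if key not in prior_cost:
            new += cost
        else:
            # Only growth counts; a key that shrank contributes nothing.
            more += max(cost - prior_cost[key], 0.0)
    label = "new" if new > more else "more"
    return {"new": new, "more": more, "label": label}
```

The label tells you who to talk to first: "new" usually means someone deployed something, "more" usually means an existing workload changed behavior.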

3) “Top service drivers”

Look for a service jumping into your top 5 that normally isn’t there:

Logs / telemetry ingestion

Data egress

Containers scaling (AKS/VMSS)

Database compute or storage growth

Backup and snapshot growth
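Spotting a service that "normally isn't there" is a set comparison between two top-5 lists. A minimal sketch, assuming you have cost per service for the prior and recent windows; the service names in the usage example are illustrative labels, not exact meter names.

```python
def new_top_services(prior_cost: dict, recent_cost: dict, n: int = 5) -> list:
    """Return services in the recent top-n that were not in the prior top-n."""
    def top(costs):
        ranked = sorted(costs.items(), key=lambda kv: kv[1], reverse=True)
        return [service for service, _ in ranked[:n]]

    baseline = set(top(prior_cost))
    # Preserve recent ranking order so the biggest newcomer comes first.
    return [service for service in top(recent_cost) if service not in baseline]

prior = {"Virtual Machines": 500, "Storage": 300, "SQL Database": 200,
         "App Service": 150, "Backup": 100, "Log Analytics": 40}
recent = {"Virtual Machines": 510, "Storage": 300, "SQL Database": 200,
          "App Service": 150, "Backup": 100, "Log Analytics": 260}
print(new_top_services(prior, recent))
```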

4) “Orphans and barnacles”

This is the easiest money you’ll ever save:

Unattached disks

Old snapshots

Idle public IPs

Load balancers with no backend

Forgotten dev/test resources that never shut off
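If you export an inventory from Resource Graph, the first four checks reduce to a filter. The sketch below assumes each resource is a dict with `name`, `type`, and `properties`, and that property names such as `diskState`, `ipConfiguration`, and `backendAddressPools` match what your query returns; verify them against your own results. The 90-day snapshot cutoff is a hypothetical threshold, and `timeCreated` is assumed to be already parsed into a timezone-aware datetime.

```python
from datetime import datetime, timedelta, timezone

def find_orphans(resources, now, snapshot_max_age_days=90):
    """Return (name, reason) pairs for resources that look orphaned.

    Property names and the age cutoff are assumptions; confirm before acting.
    """
    cutoff = now - timedelta(days=snapshot_max_age_days)
    orphans = []
    for res in resources:
        rtype = res["type"].lower()
        props = res.get("properties") or {}
        if rtype == "microsoft.compute/disks":
            if props.get("diskState") == "Unattached":
                orphans.append((res["name"], "unattached disk"))
        elif rtype == "microsoft.compute/snapshots":
            if props["timeCreated"] < cutoff:
                orphans.append((res["name"], "old snapshot"))
        elif rtype == "microsoft.network/publicipaddresses":
            # An IP bound to a NAT gateway has no ipConfiguration but is in use.
            if not props.get("ipConfiguration") and not props.get("natGateway"):
                orphans.append((res["name"], "idle public IP"))
        elif rtype == "microsoft.network/loadbalancers":
            if not props.get("backendAddressPools"):
                orphans.append((res["name"], "load balancer with no backend"))
    return orphans
```

Treat the output as a candidate list for the owner to confirm, not a delete list.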

5) “Scale changed”

Scan for sudden shifts in:

AKS node counts

VMSS instances

App Service plan size or instance count

Database tier or vCore changes
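A scale scan is a diff between two capacity snapshots. This sketch assumes you've already collected a dict per day that maps a resource ID to its SKU and instance count (how you collect it is up to you), and it uses a hypothetical 1.5x growth threshold.

```python
def scale_changes(before: dict, after: dict, growth_ratio: float = 1.5) -> list:
    """Compare two capacity snapshots and describe SKU changes and count jumps.

    Snapshot shape {"id": {"sku": str, "count": int}} and growth_ratio are assumptions.
    """
    findings = []
    for rid, now in after.items():
        prev = before.get(rid)
        if prev is None:
            continue  # brand-new resource: that's "new spend", not a scale change
        if now["sku"] != prev["sku"]:
            findings.append(f"{rid}: SKU {prev['sku']} -> {now['sku']}")
        if prev["count"] > 0 and now["count"] / prev["count"] >= growth_ratio:
            findings.append(f"{rid}: count {prev['count']} -> {now['count']}")
    return findings
```

It only flags growth. Scale-downs are ignored here because they don't cost you money.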

6) “Logging and retention drift”

This is the silent killer because it feels harmless.

Retention bumped up “temporarily”

Debug logging left on

Diagnostic settings pushed broadly without a baseline

One noisy workload flooding ingestion
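Retention drift is the easiest of these to check mechanically, provided you write the baseline down. A minimal sketch, assuming a hypothetical per-environment baseline and a list of workspaces you've already flattened into `name`, `environment`, and `retention_days`; the day counts are placeholders, not recommendations.

```python
# Hypothetical baseline: replace with whatever your platform team agrees on.
RETENTION_BASELINE_DAYS = {"dev": 30, "test": 30, "prod": 90}

def retention_drift(workspaces, baseline=RETENTION_BASELINE_DAYS):
    """Return (name, actual_days, allowed_days) for workspaces above their baseline."""
    drifted = []
    for ws in workspaces:
        allowed = baseline.get(ws["environment"])
        if allowed is None:
            # Unknown environment: a tagging problem, not a retention finding.
            continue
        if ws["retention_days"] > allowed:
            drifted.append((ws["name"], ws["retention_days"], allowed))
    return drifted
```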

7) “Owner and tag integrity”

You can’t route drift without an owner.

Does the spend map to an Owner, App, and Environment?

If not, your radar still works, but your response becomes a Slack scavenger hunt.
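The tag check is worth scripting because the answer you want is a number: how much spend can't be routed. This sketch assumes each cost row carries a `tags` dict and a `cost` value (both hypothetical row keys), and it matches tag names case-insensitively.

```python
REQUIRED_TAGS = ("Owner", "App", "Environment")

def missing_tags(tags):
    """Return the required tag names that are absent or blank on one resource."""
    present = {
        name.lower()
        for name, value in (tags or {}).items()
        if value is not None and str(value).strip()
    }
    return [tag for tag in REQUIRED_TAGS if tag.lower() not in present]

def unroutable_spend(rows):
    """Sum the cost of rows missing at least one required tag."""
    return sum(row["cost"] for row in rows if missing_tags(row.get("tags")))
```

A blank value counts as missing here, since `Owner: ""` routes to nobody.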

Ownership model (who does what)

This only works when roles are boring and explicit.

FinOps (signals + triage)

Runs the daily radar

Classifies drift (new vs more, service driver, likely cause)

Routes to an owner with a short note: “what moved, where, and why we think it moved”

Platform engineering (guardrails + safe actions)

Owns shared settings that cause drift (diagnostics defaults, retention baselines, shared services patterns)

Implements safe automation (tag enforcement, dev/test schedules, guardrails)

Keeps a “known cost traps” list that teams can actually follow

App owners (intent + workload fixes)

Confirms whether the spend is expected

Owns app-level changes (scaling rules, query tuning, caching, architecture)

Change control (only for risky moves)

Anything that can impact availability gets a ticket + change window

Reversible changes happen first, always

Action playbook (fixes that don’t break prod)

Start with what’s reversible. Save the “big brain” changes for when you have daylight.

Tier 1: Safe and reversible

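Delete unattached disks, old snapshots, and idle IPs once the owner confirms they’re unused (if you’re unsure about a disk, snapshot it first, because the delete itself can’t be undone)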

Reduce log retention where it’s clearly excessive for the environment

Turn off debug logs that were meant to be temporary

Apply dev/test shutdown schedules (policy-backed)

Tier 2: Low risk, but get a quick sign-off

Right-size down one step (not three)

Tighten autoscale rules to prevent runaway growth

Split noisy logs or reduce verbosity

Tier 3: Real engineering work

Fix chatty systems driving egress

Optimize database queries and indexes

Rework telemetry patterns to reduce ingestion without losing signal

Operator rule: never start with the riskiest fix just because it’s the biggest bar on the chart.
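That rule translates directly into a sort order: tier first, savings second. A small sketch, where the `Action` type and its field names are hypothetical and the savings figures are whatever estimates you already have.

```python
from dataclasses import dataclass

@dataclass
class Action:
    description: str
    tier: int                   # 1 = safe and reversible, 3 = real engineering work
    est_monthly_savings: float  # your own estimate, in your billing currency

def order_actions(actions):
    """Order by risk tier first, then by largest estimated savings within a tier."""
    return sorted(actions, key=lambda a: (a.tier, -a.est_monthly_savings))

queue = order_actions([
    Action("Rework telemetry pipeline", tier=3, est_monthly_savings=4000.0),
    Action("Turn off debug logs", tier=1, est_monthly_savings=300.0),
    Action("Right-size plan one step", tier=2, est_monthly_savings=900.0),
    Action("Remove confirmed-unused disks", tier=1, est_monthly_savings=650.0),
])
for action in queue:
    print(action.tier, action.description)
```

The biggest bar on the chart (the telemetry rework) lands last, which is exactly the point.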

Copy/paste checklist block (reuse this every issue)

7-Day Cost Drift Radar (Daily)

Management group scope: last 7 days vs prior 7 days trend

Top 3 subscription movers identified

Top 3 resource group movers identified inside each subscription

Classify: new spend vs more spend

Check orphans (disks, snapshots, IPs)

Check scale changes (AKS/VMSS/App Service/DB tiers)

Check logs + retention drift

Confirm tags: Owner, App, Environment

Route: assign platform/app owner with a 2-sentence summary

Apply Tier 1 fixes today, queue Tier 2/3 via ticket + change window

The next 15 minutes (do this today)

Pick one management group and run the 7-day comparison.

Write down the top 3 movers and label each as new or more.

Assign an owner for each mover, even if the owner is “unknown” for now. Unknown is still a status.

Apply one Tier 1 fix. Just one. Prove the loop works.